Frank Dunn Kern was an American mycologist and phytopathologist. He was born in 1883, died in 1973, and spent much of his life studying and teaching botany at Penn State. His 37 years at Penn State are honored by the name of our graduate school building, the Kern Graduate School. Kern studied pathogenic rust fungi, a topic he referenced frequently in his talk for the Botanical Society of America’s symposium on “The Genetic Relationship of Organisms,” held on December 30, 1914. I recently found a transcript of his talk, later published in the American Journal of Botany, and couldn’t help but wish that Kern were still alive to give another lecture at Penn State.

His 1914 talk, titled “The Genetic Relationship of Parasites,” posed questions about rust fungi that, 100 years later, I find incredibly relevant in the context of malaria. The CIDD seminar series has a tradition of allowing students to meet and interview lecturers after the seminar, but as Kern and I missed each other somewhere in the too-large generation gap, I wanted to have an imaginary interview instead. I am pretending to have just attended what must have been a fascinating seminar, and I am basing his responses on the content of his lecture transcript. Kern’s talk, given before the era of Watson and Crick and the DNA-based field of genetics, covered broad topics including the evolution of parasites, the genetic relationships between hosts in multihost systems, the taxonomic classifications of hosts and parasites, the evolution of sexuality, generalist vs. specialist trade-offs, and virulence theory. It was an ambitious set of topics for one lecture, but Kern covered all of them effectively. It should be noted that Kern did not study malaria, though I think good things would have happened if he had, and I am thus taking much creative license in hypothesizing what he would have said about the malaria system.

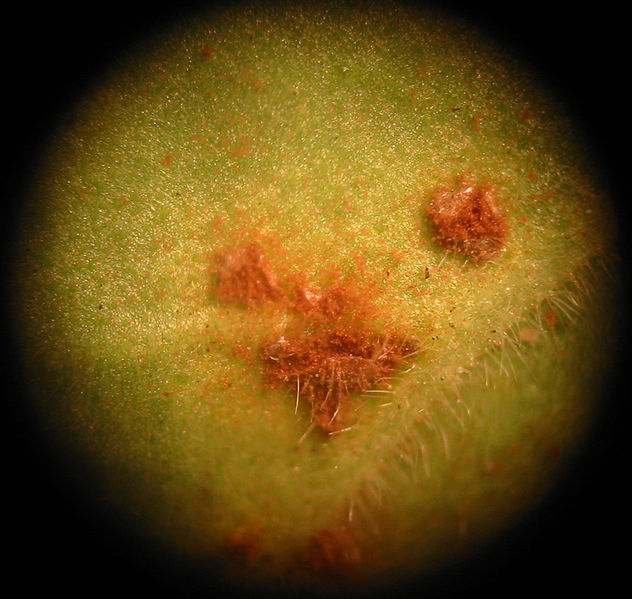

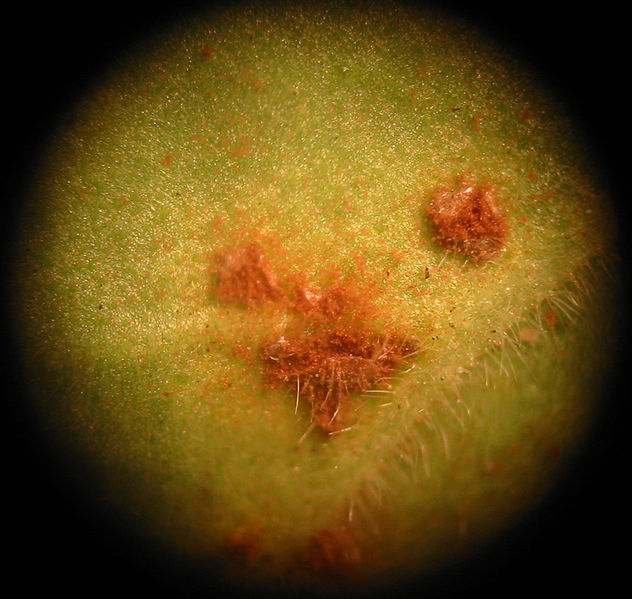

An up-close photograph of rust fungus. Image courtesy of Wikimedia Commons.

Me: So Frank, I study malaria — how is rust fungus like malaria?

Frank Dunn Kern (FDK): Malaria and rust fungus are both heteroecious, meaning that they require two different hosts to complete their life cycles. Both undergo sexual and asexual phases of reproduction and separate these life stages in different hosts, taking sexual forms in an intermediate host, and mostly asexual forms in the primary host. There is a tendency in rust fungi to have the “gametophytic hosts higher in classification than the telial hosts […].” Might there be a similar trend in heteroecious protozoa, like malaria, in their asexual phase hosts vs. sexual phase hosts?

Me: We see that malaria vectors host the sexual phase of the parasites, while the vertebrate hosts harbor asexual phases. That is true for malaria across taxa and seems to be the case for trypanosomes and Leishmania major. I have no idea why this is the case. (If anyone knows, I would love to have the theory explained to me.)

FDK: In rust fungus, historic evidence from when the parasite was autoecious has indicated that evolution to heteroecious forms has resulted in the original autoecious host evolving to be the gametophytic host and the novel host taking on the role of the telial host. Understanding the evolution of malaria into its intermediate and primary hosts might help you in understanding whether there is a parallel in these systems.

To continue answering your question, there are other similarities as well. Both malaria and rust fungi are obligate parasites. Both are pleomorphic, showing very different phenotypes in different host environments. And, at a basic level of similarity, both are eukaryotes.

Me: Your lecture mentioned the use of parasites to better understand genetic relationships between host species, and thus to better organize taxonomic groups. If in 40 years we discover the nature of genetic material coding for differences between hosts, do you think we will see host-host relationships predicting genetic similarities more strongly than other factors such as morphologic similarities predicting genetic similarities? [Watson and Crick’s discovery of the double helix is not until 1953, 39 years after 1914]

FDK: When I started studying Gymnosporangium, I found that the fungus was not only found on hosts in the juniper and apple families, but also on a member of the rose family, and later on a member of the hydrangea family. Though these families were not always classified in the same order, further morphological studies reclassified them as such, and that regrouping supports my argument for using parasites to show relatedness. In future research, when we can see the genetic material of these hosts and do a more precise comparison of similarity, I think we might find this trend in many types of hosts, where the ability to share parasites might indicate shared genetic factors.

Me: We can ask that question better with an understanding of the genetic code. Humans are hypothesized to have acquired malaria from other apes, and the same is true for SIV/HIV and Ebola. Is this a trend where humans are more likely to experience spillover from more closely related species? Do we acquire novel pathogens from other apes more frequently than from raccoons or bats or cats? It seems possible that we would be more likely to share pathogens with host species that are similar to us. In the example of dogs acquiring parvovirus from cats with feline panleukopenia virus, could that also be explained by genetically similar hosts more easily experiencing spillover than ones that are genetically very different? Were dogs any more likely than humans to get a feline virus, or were they just victims of chance? If the genetic similarity theory has credence, then dogs were more likely because of their genetic relatedness to cats. It seems to me that humans are more likely to be infected by diseases of other mammals than by diseases of reptiles, amphibians, or plants.

FDK: That is something future research on genetics may be able to tell us. Hopefully we will get an answer.

[End of the interview].

Imaginary interviews are wonderful because I can end the conversation whenever I want, rather than waiting for the already-decided end time or for a socially appropriate point in conversation. If you have never read Kern’s work, I think it deserves reading by malariologists. Rust fungi and malaria have similar evolutionary histories, and I wonder how much of their evolution can be explained by the same story. Did malaria evolve from being autoecious in humans, similar to the rust fungus in juniper? When malaria spills over into novel hosts, can these host types be predicted by host genetics? Is there a pattern in which host environment asexual forms prefer and which host environment sexual forms prefer? And what explains this pattern?